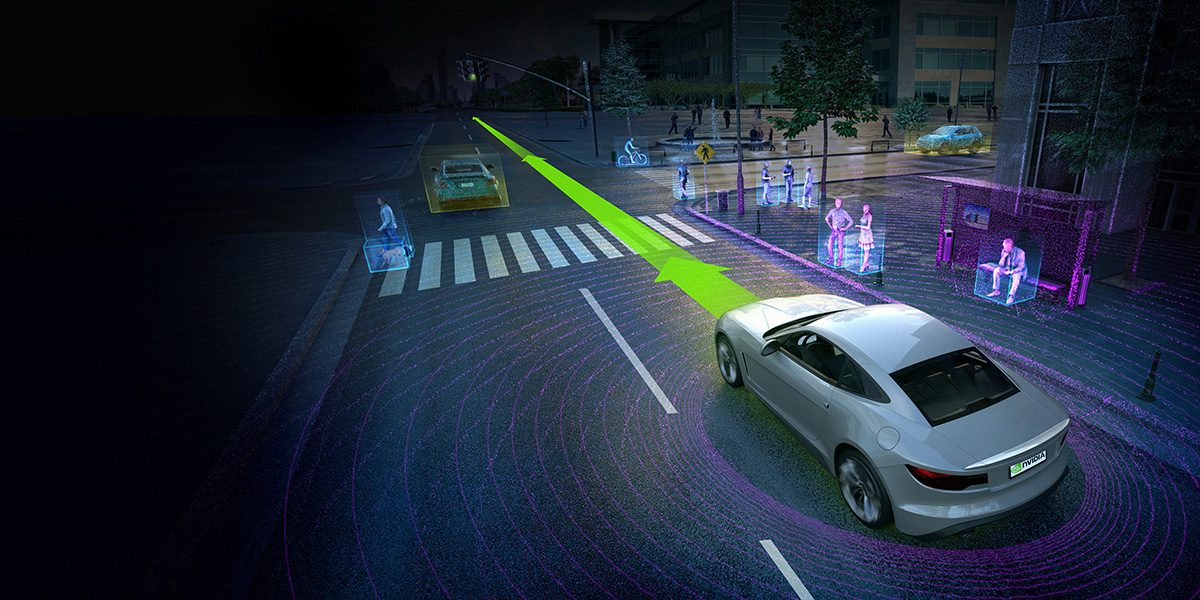

In this section we aim to navigate autonomously. To do so, we use the images taken by the camera to find objects that must be avoided, and we use the lanes visible in each image to stay within the track boundaries at all times. Detection of these features is learned with the Detectron2 library, specifically its Mask R-CNN model. The detected features are then passed to our car, which uses them to navigate autonomously with the help of ROS.

We drive our car manually (using a controller) around a track while recording images. The images can be seen on the left. We record at a limited frame rate so that we capture mostly distinct images for training our model. In our case, the main features we want the model to detect are the cones and the lanes. Image collection and input are handled with the help of ROS.
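The frame-rate limit described above can be sketched as a small throttle that keeps a frame only if enough time has passed since the last saved one. This is a minimal illustration, not our actual recording node: the class name `FrameThrottle`, the `max_fps` value, and the simulated timestamps are all assumptions, and in practice the timestamps would come from the ROS image messages.

```python
class FrameThrottle:
    """Decide which incoming frames to keep so saved images are spaced apart in time."""

    def __init__(self, max_fps: float):
        if max_fps <= 0:
            raise ValueError("max_fps must be positive")
        self.min_interval = 1.0 / max_fps  # minimum seconds between saved frames
        self.last_saved = None             # timestamp of the most recently kept frame

    def should_save(self, stamp: float) -> bool:
        """Return True if the frame captured at `stamp` (seconds) should be kept."""
        if self.last_saved is None or stamp - self.last_saved >= self.min_interval:
            self.last_saved = stamp
            return True
        return False


# Simulated 30 fps camera stream lasting one second (hypothetical values),
# throttled to at most 2 saved frames per second.
throttle = FrameThrottle(max_fps=2.0)
stamps = [i / 30.0 for i in range(30)]
kept = [t for t in stamps if throttle.should_save(t)]
print(kept)
```

A lower `max_fps` gives fewer but more distinct images; the right value depends on how fast the car is driven during recording.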

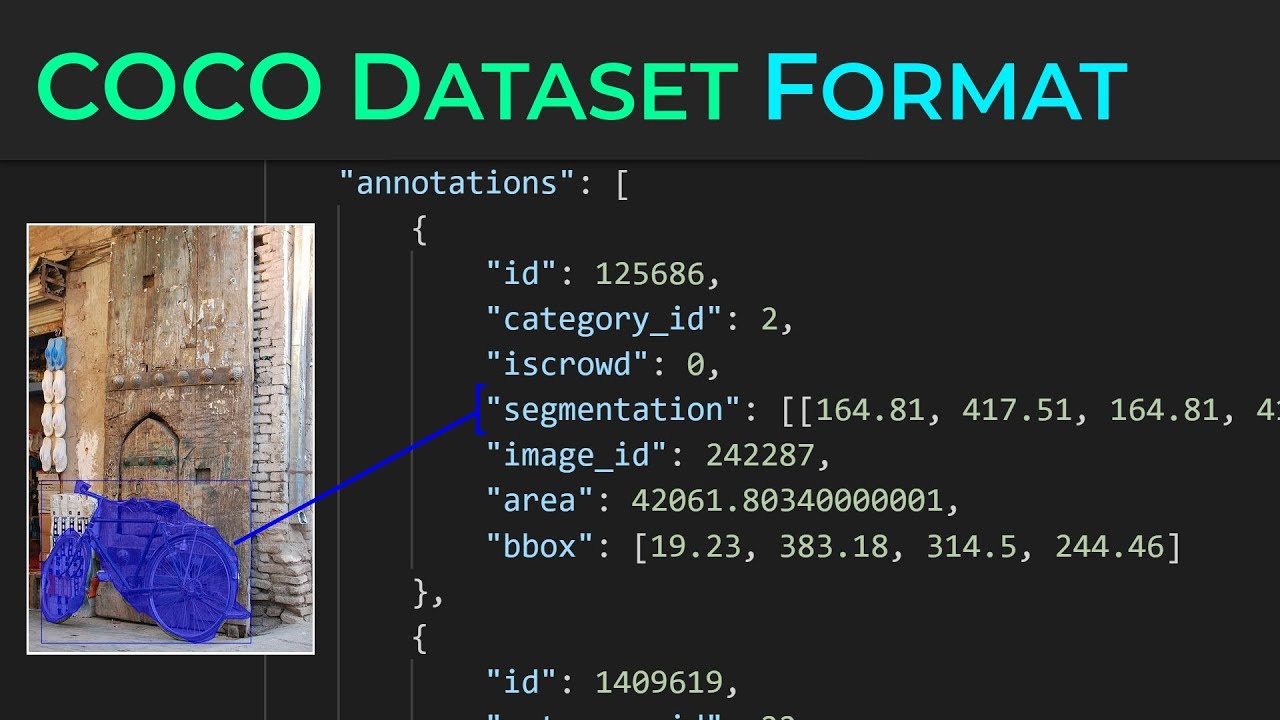

We take the images collected earlier and label them manually. You can see the labelling format in the image to the right: this is the COCO JSON format. We mainly use the segmentation information so that the model can accurately detect the lanes and cones down to their shape.
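As a rough illustration of the COCO JSON layout, the sketch below builds a one-image dataset with a single polygon annotation. The file name, image size, category ids, and polygon coordinates are made up for the example; only the overall structure (`images`, `categories`, `annotations`, with `segmentation` stored as flat `[x1, y1, x2, y2, ...]` polygons and `bbox` as `[x, y, width, height]`) follows the COCO convention.

```python
import json


def polygon_to_bbox(polygon):
    """Return the [x, y, width, height] box enclosing a flat [x1, y1, x2, y2, ...] polygon."""
    xs, ys = polygon[0::2], polygon[1::2]
    return [min(xs), min(ys), max(xs) - min(xs), max(ys) - min(ys)]


def polygon_area(polygon):
    """Area of a simple polygon via the shoelace formula."""
    xs, ys = polygon[0::2], polygon[1::2]
    n = len(xs)
    twice_area = sum(xs[i] * ys[(i + 1) % n] - xs[(i + 1) % n] * ys[i] for i in range(n))
    return abs(twice_area) / 2.0


# Hypothetical triangular outline of a cone, in pixel coordinates.
cone_polygon = [100, 200, 140, 200, 120, 150]

dataset = {
    "images": [{"id": 1, "file_name": "frame_0001.png", "width": 640, "height": 480}],
    "categories": [{"id": 1, "name": "cone"}, {"id": 2, "name": "lane"}],
    "annotations": [
        {
            "id": 1,
            "image_id": 1,
            "category_id": 1,
            "segmentation": [cone_polygon],  # one polygon per connected region
            "bbox": polygon_to_bbox(cone_polygon),
            "area": polygon_area(cone_polygon),
            "iscrowd": 0,
        }
    ],
}

coco_json = json.dumps(dataset, indent=2)
print(coco_json)
```

Labelling tools usually write this file for you; the point here is only to show which fields carry the shape information the model learns from.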

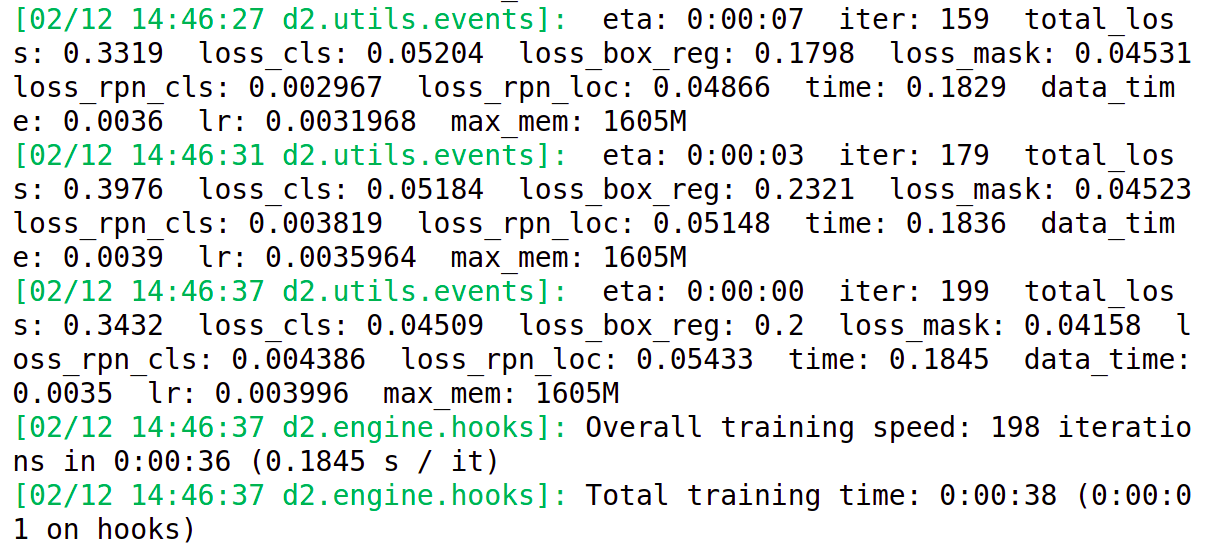

These images are now passed into a Detectron2 Mask R-CNN model for training. The Mask R-CNN has already been pretrained on a larger, more general dataset to detect objects; we are only fine-tuning it for our specific use case. We try several combinations of learning rate, number of epochs, and other training parameters. The models are evaluated on held-out validation data that they have not seen during training, to measure how well they generalize.
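The parameter search described above can be sketched as a simple grid sweep that keeps the configuration with the best validation score. Everything here is an assumption for illustration: `sweep` and `fake_train_and_evaluate` are hypothetical helpers, the candidate values are arbitrary, and the scoring function is a stand-in for actually fine-tuning the model and evaluating it on the validation set. The parameter names mirror Detectron2's `SOLVER.BASE_LR` and `SOLVER.MAX_ITER` config keys, since Detectron2 counts training length in iterations.

```python
from itertools import product


def sweep(train_and_evaluate, learning_rates, iteration_counts):
    """Try every (learning rate, iteration count) pair and return the best by validation score."""
    best_params, best_score = None, float("-inf")
    for lr, iters in product(learning_rates, iteration_counts):
        params = {"base_lr": lr, "max_iter": iters}
        score = train_and_evaluate(params)  # higher is better
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


def fake_train_and_evaluate(params):
    """Placeholder for a real training run; returns a made-up validation score."""
    lr_penalty = abs(params["base_lr"] - 0.001) * 100
    iter_penalty = abs(params["max_iter"] - 1000) / 10000
    return 1.0 - lr_penalty - iter_penalty


best_params, best_score = sweep(
    fake_train_and_evaluate,
    learning_rates=[0.01, 0.001, 0.0001],
    iteration_counts=[500, 1000, 2000],
)
print(best_params, best_score)
```

In a real run, `train_and_evaluate` would fine-tune the pretrained model with the given parameters and return a validation metric such as mask average precision.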

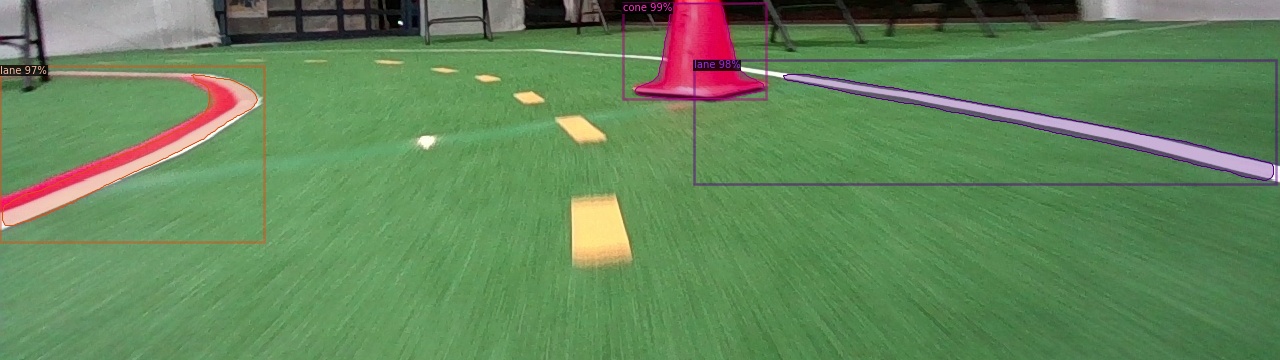

Once we know which parameters work best, we use the model trained with that configuration for inference. You can see how the image we took earlier is now labelled, with confidence levels on the cones and the lanes. We can extract the bounding boxes and masks drawn over the lanes and cones and use them for navigation.
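A minimal sketch of the extraction step follows, assuming the predictions have already been converted into plain Python dictionaries. In Detectron2 the raw predictions live in `outputs["instances"]` (with fields such as `pred_masks`, `pred_boxes`, `scores`, and `pred_classes`); the dictionary keys, the 0.5 threshold, and the tiny 3x4 masks below are illustrative assumptions, not our actual values.

```python
def filter_detections(detections, min_score=0.5):
    """Keep only detections whose confidence is at least `min_score`."""
    return [d for d in detections if d["score"] >= min_score]


def merge_masks(detections, label, height, width):
    """Union the masks of all detections with `label` into one binary image (1 = white)."""
    merged = [[0] * width for _ in range(height)]
    for det in detections:
        if det["label"] != label:
            continue
        for r in range(height):
            for c in range(width):
                if det["mask"][r][c]:
                    merged[r][c] = 1
    return merged


# Hypothetical predictions on a 3x4 image: two lanes and two cones.
detections = [
    {"label": "lane", "score": 0.90, "mask": [[1, 0, 0, 0], [1, 0, 0, 0], [1, 0, 0, 0]]},
    {"label": "lane", "score": 0.80, "mask": [[0, 0, 0, 1], [0, 0, 0, 1], [0, 0, 0, 1]]},
    {"label": "cone", "score": 0.30, "mask": [[0, 0, 1, 0], [0, 0, 0, 0], [0, 0, 0, 0]]},
    {"label": "cone", "score": 0.95, "mask": [[0, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 0]]},
]

confident = filter_detections(detections, min_score=0.5)
lane_mask = merge_masks(confident, "lane", height=3, width=4)
cone_mask = merge_masks(confident, "cone", height=3, width=4)
print(lane_mask)
print(cone_mask)
```

The low-confidence cone is dropped before merging, so it never reaches the navigation step.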

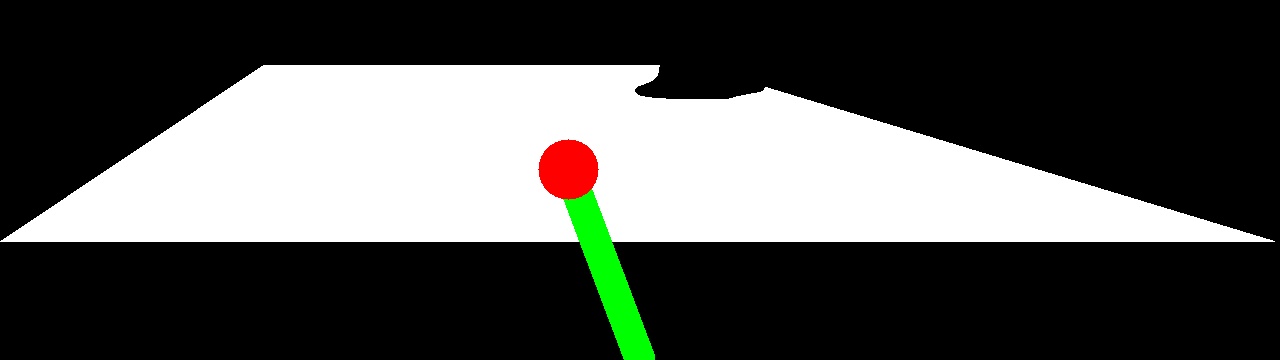

We extract the masks and bounding boxes as described in the step above. With a black and white image like this, we search for the optimal point to move towards in the image (bounded by the lanes). Some images have one of the lanes missing. In that case we assume that our car is far away from the missing lane and use the image edges to form the white polygon you see on the left. Once we find the point to move towards, we calculate a speed and steering angle, which are passed to our speed controller with the help of ROS.
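One simple way to turn lane positions into a target point, steering angle, and speed is sketched below. This is an assumed approach for illustration, not necessarily the exact rule our car uses: the target is taken as the midpoint between the lanes on a look-ahead row, a missing lane is replaced by the image edge, the car is assumed to sit at the bottom center of the image, and the speed rule and 30 degree limit are hypothetical.

```python
import math


def target_point(left_x, right_x, row, width):
    """Midpoint between the lanes on a look-ahead row; a missing lane (None) uses the image edge."""
    if left_x is None:
        left_x = 0
    if right_x is None:
        right_x = width - 1
    return ((left_x + right_x) / 2.0, row)


def steering_and_speed(target, width, height, max_speed=1.0, max_angle=math.radians(30)):
    """Return (angle in radians, speed). Positive angle means the target is to the right."""
    car_x, car_y = (width - 1) / 2.0, height - 1  # car assumed at bottom center
    dx = target[0] - car_x
    dy = car_y - target[1]  # image y grows downward, so flip the sign
    angle = math.atan2(dx, dy)
    angle = max(-max_angle, min(max_angle, angle))  # clamp to the steering limit
    # Assumed rule: slow down linearly, to half speed at full steering lock.
    speed = max_speed * (1.0 - 0.5 * abs(angle) / max_angle)
    return angle, speed


WIDTH, HEIGHT, LOOKAHEAD_ROW = 641, 480, 279  # hypothetical image size and row

# Both lanes visible and centered around the car: drive straight at full speed.
straight = steering_and_speed(target_point(220, 420, LOOKAHEAD_ROW, WIDTH), WIDTH, HEIGHT)

# Left lane missing: the left image edge stands in for it, so the target shifts left.
missing_left = steering_and_speed(target_point(None, 420, LOOKAHEAD_ROW, WIDTH), WIDTH, HEIGHT)

print(straight, missing_left)
```

The resulting angle and speed would then be published to the controller through ROS; the sign convention and units must match whatever the controller expects.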