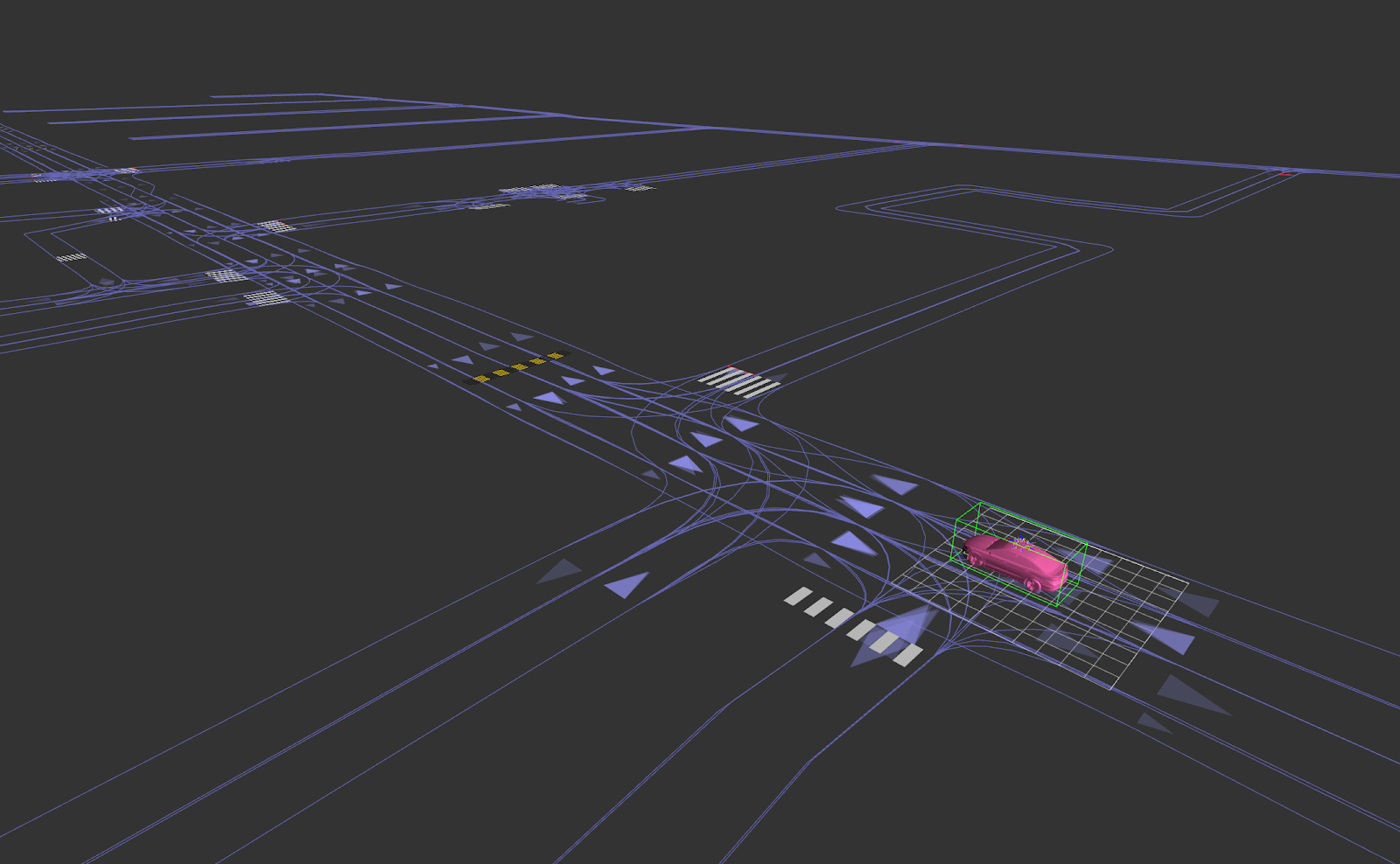

To navigate indoors, the car needs positional precision within a few inches. It therefore cannot always rely on GPS, which is typically accurate only to about 1-2 meters. In this section, we create a map for our car so that it can localize (find) itself within an environment it has not seen before. The map is created through a process called SLAM (Simultaneous Localization and Mapping). We use RTABMAP, a SLAM algorithm, through ROS to process the sensor data and build the map.

We drive the car manually around the track while it receives image data from our RGB-D camera. We drive slowly so that each image can be processed accurately, which lets the car map and localize simultaneously. To keep experiments consistent, we also use the yellow line on the track as a guide for the car to follow. Consistency is key when creating these maps: the algorithm constantly compares new image data against previously recorded image data, so if the car moves too fast or does not follow a consistent path, it may fail to localize itself even when it returns to a location it has already visited. The goal is to produce as many “greens” as possible; a green means the algorithm has found a match between new and old image data.
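To make the idea of "matching new image data against old image data" concrete, the sketch below shows a deliberately simplified appearance-based check. It is not RTAB-Map's actual loop-closure detector, which is considerably more involved. We assume each frame has already been reduced to a list of integer "visual word" IDs (a hypothetical representation), and we compare frames with the cosine similarity of their word histograms. The function names, the `threshold` of 0.8, and the `skip_recent` parameter (which ignores the most recent frames, since consecutive frames trivially look alike) are all illustrative assumptions.

```python
import math
from collections import Counter


def similarity(words_a, words_b):
    """Cosine similarity between two bag-of-visual-words histograms."""
    ha, hb = Counter(words_a), Counter(words_b)
    # Only words present in both frames contribute to the dot product.
    dot = sum(ha[w] * hb[w] for w in ha.keys() & hb.keys())
    norm = math.sqrt(sum(v * v for v in ha.values())) * math.sqrt(
        sum(v * v for v in hb.values())
    )
    return dot / norm if norm else 0.0


def find_loop_closure(new_frame, past_frames, threshold=0.8, skip_recent=2):
    """Return the index of the best-matching older frame, or None.

    Hypothetical helper: the newest `skip_recent` frames are excluded so the
    car does not "close a loop" with the frame it just captured.
    """
    candidates = past_frames[:-skip_recent] if skip_recent else past_frames
    best_idx, best_score = None, threshold
    for i, frame in enumerate(candidates):
        score = similarity(new_frame, frame)
        if score >= best_score:  # keep the highest score at or above threshold
            best_idx, best_score = i, score
    return best_idx


past = [[1, 2, 3, 4], [5, 6, 7], [8, 9], [10, 11]]
print(find_loop_closure([1, 2, 3, 4], past))  # revisits the first location
```

This also hints at why driving slowly helps: if the car moves too fast, frames of the same place share fewer visual words, their similarity drops below the threshold, and no match (no "green") is found.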

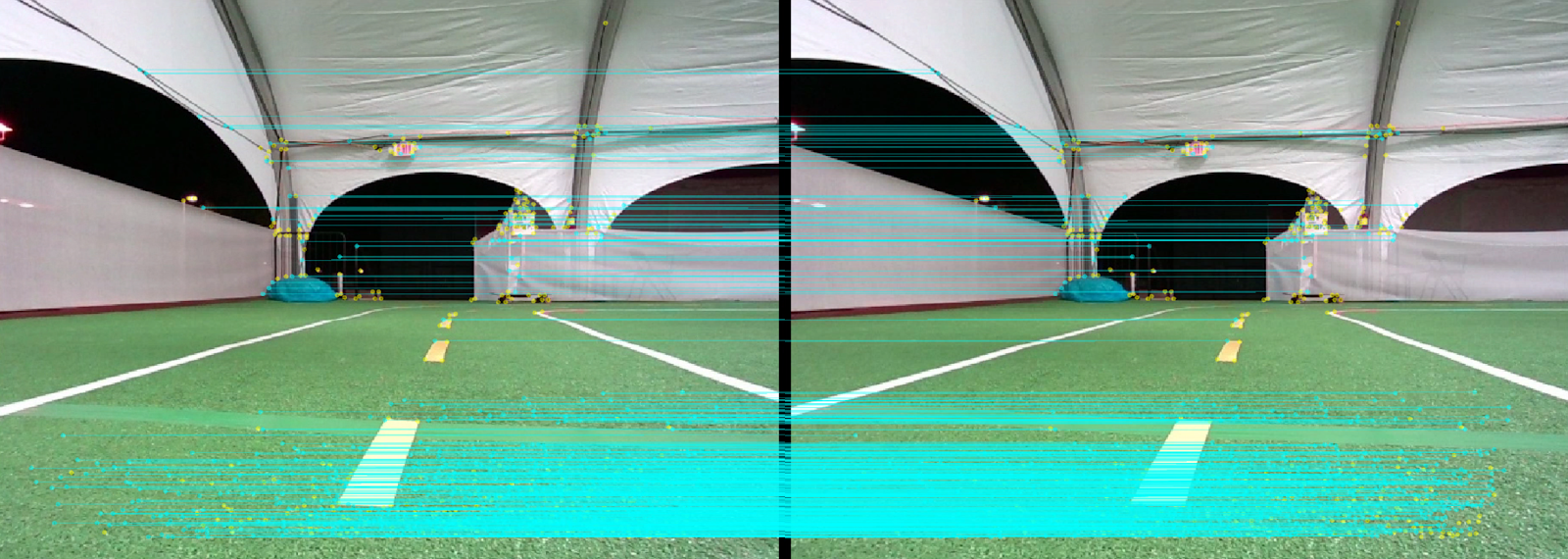

The dataset contains both image data and position data. Each image contains key points (distinctive visual features), and when the algorithm matches a key point in one image to the corresponding key point in a different image, it draws a blue line connecting the two points to mark a loop closure. Alongside the images, the dataset records positional data such as the x, y, and z positions, along with the wheel rotation angles.
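The key-point matching described above can be illustrated with a small, self-contained sketch. We assume binary descriptors (as produced by ORB-style feature extractors) stored as Python integers, compare them with the Hamming distance, and keep a match only if it passes Lowe's ratio test, i.e. the best candidate is clearly better than the second best. Which descriptor and matcher RTABMAP actually uses depends on its configuration; the function names and the 0.8 ratio here are assumptions for illustration.

```python
def hamming(a: int, b: int) -> int:
    """Number of differing bits between two binary descriptors."""
    return bin(a ^ b).count("1")


def match_keypoints(desc_a, desc_b, ratio=0.8):
    """Match descriptors in image A to image B using a ratio test.

    Returns a list of (index_in_a, index_in_b) pairs; each pair would be
    drawn as one line connecting the two key points.
    """
    matches = []
    if len(desc_b) < 2:
        return matches  # the ratio test needs at least two candidates
    for i, d in enumerate(desc_a):
        # Sort candidates in image B by distance to this descriptor.
        dists = sorted((hamming(d, e), j) for j, e in enumerate(desc_b))
        (best, j), (second, _) = dists[0], dists[1]
        # Accept only if the nearest neighbour is clearly better than the runner-up.
        if best < ratio * second:
            matches.append((i, j))
    return matches


image_a = [0b11110000, 0b00001111]
image_b = [0b11110001, 0b00001111, 0b10101010]
print(match_keypoints(image_a, image_b))
```

The ratio test discards ambiguous key points (for example, repeated texture on the track) that match several locations almost equally well, which would otherwise produce false loop closures.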

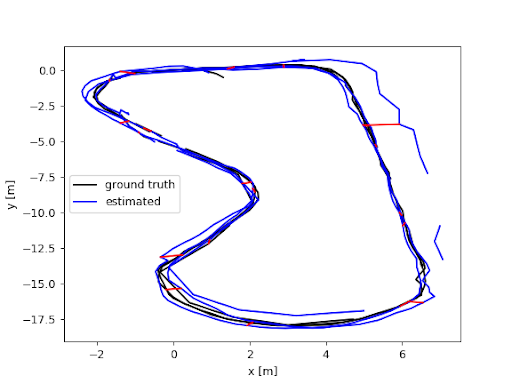

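We use the Absolute Trajectory Error (ATE) as our main metric for evaluating the map. ATE measures the distance between the trajectory estimated from the sensor data and the true (ground-truth) trajectory; it is commonly reported as the root-mean-square error of the position differences after the two trajectories have been aligned. A lower ATE means the map more closely matches the real world.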
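As a sketch of how ATE is commonly computed, the function below rigidly aligns an estimated trajectory to the ground truth (best rotation plus translation, found in closed form) and then takes the RMSE of the remaining position errors. We assume the two trajectories are already time-associated (pose `i` in one corresponds to pose `i` in the other), that only 2D positions matter because the car drives on a flat floor, and that no scale correction is needed since an RGB-D camera gives metric depth. The function name `ate_rmse` is hypothetical.

```python
import math


def ate_rmse(estimated, ground_truth):
    """ATE as RMSE between time-associated 2D trajectories, after rigidly
    aligning (rotation + translation, no scale) the estimate onto the truth."""
    if len(estimated) != len(ground_truth) or not estimated:
        raise ValueError("trajectories must be non-empty and time-associated")
    n = len(estimated)
    # Centroids of both trajectories (removing them solves the translation).
    pcx = sum(x for x, _ in estimated) / n
    pcy = sum(y for _, y in estimated) / n
    gcx = sum(x for x, _ in ground_truth) / n
    gcy = sum(y for _, y in ground_truth) / n
    # Closed-form optimal 2D rotation of the centred estimate onto the centred truth.
    dot = cross = 0.0
    for (px, py), (gx, gy) in zip(estimated, ground_truth):
        px, py, gx, gy = px - pcx, py - pcy, gx - gcx, gy - gcy
        dot += gx * px + gy * py
        cross += gy * px - gx * py
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # Mean squared position error after alignment.
    sq = 0.0
    for (px, py), (gx, gy) in zip(estimated, ground_truth):
        px, py = px - pcx, py - pcy
        rx, ry = c * px - s * py, s * px + c * py
        sq += (gx - gcx - rx) ** 2 + (gy - gcy - ry) ** 2
    return math.sqrt(sq / n)


print(ate_rmse([(0, 0), (2, 0)], [(0, 0), (1, 0)]))  # estimate overshoots the true path
```

Aligning first matters: a map that is perfectly shaped but starts in a different frame (shifted or rotated) should not be penalized, so only the error that remains after the best alignment counts toward ATE.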

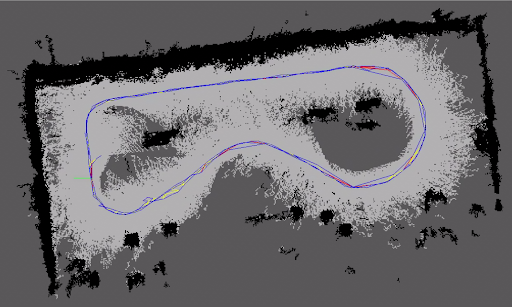

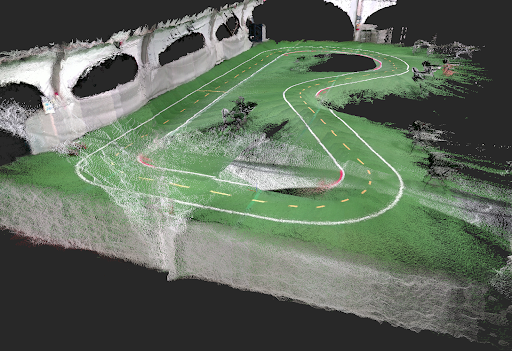

We now have a map of the environment, and the car is able to properly localize itself within it. The following images are 2D and 3D visualizations of the track. In the 2D image, black areas are occupied by objects, white areas are free space the car has seen, and dark gray areas are places the car has not yet seen.
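The three colors in the 2D map correspond to the three cell states of an occupancy grid. The sketch below assumes the map is stored in the ROS `nav_msgs/OccupancyGrid` convention, where each cell is `-1` for unknown or `0`-`100` for the probability (in percent) that it is occupied, and converts it to 8-bit gray values. The specific gray levels (0 for occupied, 254 for free, 205 for unknown) and the thresholds of 65 and 25 mirror what we understand ROS's `map_saver` uses by default, but treat them as assumptions; `occupancy_to_gray` is a hypothetical helper.

```python
def occupancy_to_gray(grid, occupied_thresh=65, free_thresh=25):
    """Convert occupancy values to 8-bit gray levels.

    Assumed convention: -1 = unknown, 0..100 = occupancy probability in percent.
    Output: 0 (black) = occupied, 254 (white) = free, 205 (dark gray) = unknown.
    """
    OCCUPIED, FREE, UNKNOWN = 0, 254, 205
    image = []
    for row in grid:
        out = []
        for v in row:
            if v < 0:
                out.append(UNKNOWN)   # never observed by the car
            elif v >= occupied_thresh:
                out.append(OCCUPIED)  # confidently contains an object
            elif v <= free_thresh:
                out.append(FREE)      # observed and confidently empty
            else:
                out.append(UNKNOWN)   # observed, but evidence is inconclusive
        image.append(out)
    return image


print(occupancy_to_gray([[-1, 0, 100], [50, 25, 65]]))
```

Cells with inconclusive evidence are drawn as unknown rather than forced to black or white, which keeps noisy readings from appearing as spurious obstacles or free space.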